https://www.jianshu.com/p/3c13017ad492

https://github.com/tomisacat/VideoToolboxCompression

https://blog.csdn.net/meiwenjie110/article/details/69524432

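Starting with iOS 8, Apple opened up VideoToolbox.framework, which lets developers use the hardware built into iOS devices to encode and decode video. The advantage of hardware codecs is that the heavy computation is done by dedicated circuitry, which is usually more efficient, faster, and less power-hungry than doing the same work on the CPU. H.264 is currently a very popular video compression format at the coding layer. Our project uses RTMP and HTTP at the protocol layer, but the video coding layer is H.264 in both cases.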

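For background on the H.264 format, see the article 深入浅出理解视频编码H264结构 ("Understanding the H.264 Video Coding Structure"); it is not repeated here. On iOS there are two ways to encode video data as H.264: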

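- Software encoding: use an open-source library such as ffmpeg. The computation runs on the CPU, so it is less efficient, but it is general-purpose and cross-platform.

- Hardware encoding: use VideoToolbox. The computation runs on the device's dedicated video hardware rather than the CPU, so it is very efficient.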

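While getting familiar with H.264, and to understand it better, I tried using VideoToolbox to hardware-encode and hardware-decode a raw H.264 bitstream.

This post focuses on hardware-encoding H.264 with VideoToolbox.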

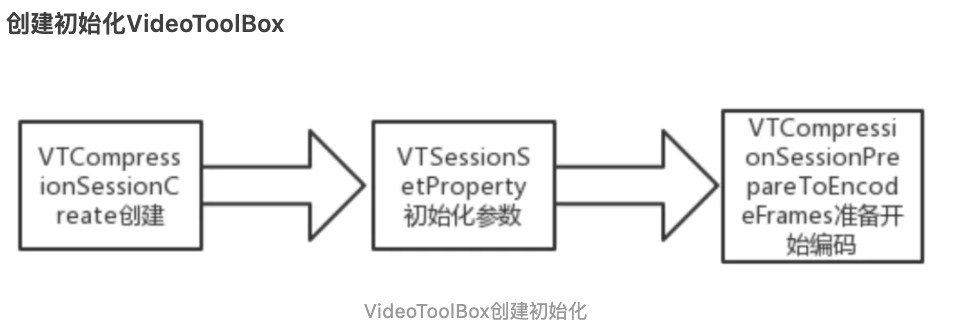

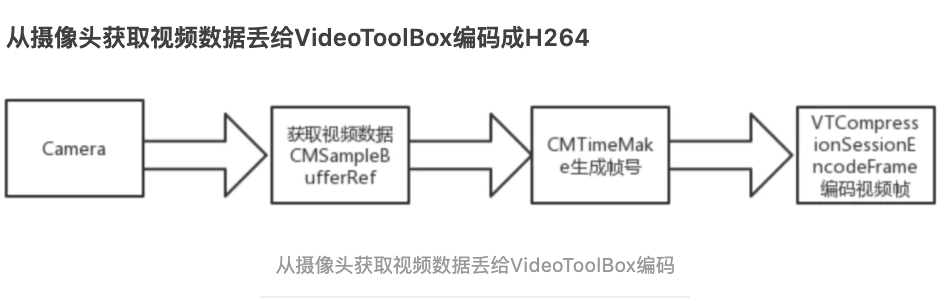

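The steps for hardware-encoding H.264 with VideoToolbox are:

1. Initialize the camera. When configuring the output, set its delegate and output queue, and process the captured images in the delegate method.

2. Initialize VideoToolbox and set its properties.

3. Get each frame of data and encode it.

4. When a frame has been encoded, check in the output callback whether it is a key frame. If it is, get the CMFormatDescriptionRef with CMSampleBufferGetFormatDescription, then get the SPS and PPS with CMVideoFormatDescriptionGetH264ParameterSetAtIndex. Finally, replace the first four bytes (the length prefix) of every NALU in the frame with the start code 0x00 00 00 01 before writing it to the file.

5. Repeat steps 3 and 4.

6. Call VTCompressionSessionCompleteFrames to finish encoding, then destroy the session with VTCompressionSessionInvalidate and release it.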
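The byte-level rewrite in step 4 can be tried in isolation. The following is a minimal sketch, assuming the encoder output is in AVCC layout with a 4-byte big-endian length in front of each NAL unit, as the sample code below also assumes. The function name `annexB(fromAVCC:lengthSize:)` and the test bytes are made up for illustration and are not part of the sample project.

```swift
import Foundation

/// Converts an AVCC buffer (each NAL unit prefixed by a big-endian length)
/// into Annex B (each NAL unit prefixed by the start code 00 00 00 01).
/// Hypothetical helper for illustration only.
func annexB(fromAVCC avcc: [UInt8], lengthSize: Int = 4) -> [UInt8] {
    let startCode: [UInt8] = [0, 0, 0, 1]
    var output: [UInt8] = []
    var offset = 0
    // Continue only while a complete length prefix is available.
    while offset + lengthSize <= avcc.count {
        // Assemble the big-endian length byte by byte, so no endianness swap is needed.
        var naluLength = 0
        for i in 0..<lengthSize {
            naluLength = (naluLength << 8) | Int(avcc[offset + i])
        }
        let payloadStart = offset + lengthSize
        let payloadEnd = payloadStart + naluLength
        // Stop on an empty or truncated unit instead of reading out of bounds.
        guard naluLength > 0, payloadEnd <= avcc.count else { break }
        output += startCode
        output += avcc[payloadStart..<payloadEnd]
        offset = payloadEnd
    }
    return output
}

// Two made-up NAL units: one with a 2-byte payload, one with a 3-byte payload.
let avcc: [UInt8] = [0, 0, 0, 2, 0x65, 0xAA,
                     0, 0, 0, 3, 0x41, 0xBB, 0xCC]
let converted = annexB(fromAVCC: avcc)
print(converted)
```

Because the length prefix and the start code are both four bytes here, the converted buffer has the same size as the input. The sample code reaches the same result by writing a start code followed by each payload, rather than patching the buffer in place.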

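Sample code

In practice, VideoToolbox hardware encoding is mostly used to stream the encoded NAL units rather than to write a local H.264 file. If you want to save to disk, AVAssetWriter is a better choice; it also uses the hardware encoder internally.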

ViewController.swift

//

// ViewController.swift

// VideoToolboxCompression

//

// Created by tomisacat on 12/08/2017.

// Copyright © 2017 tomisacat. All rights reserved.

//

import UIKit

import AVFoundation

import VideoToolbox

fileprivate var NALUHeader: [UInt8] = [0, 0, 0, 1]

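// In practice, VideoToolbox hardware encoding is mostly used to stream the encoded NAL units rather than to write a local H.264 file.

// If you want to save to disk, AVAssetWriter is a better choice; it also uses the hardware encoder internally.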

func compressionOutputCallback(outputCallbackRefCon: UnsafeMutableRawPointer?,

sourceFrameRefCon: UnsafeMutableRawPointer?,

status: OSStatus,

infoFlags: VTEncodeInfoFlags,

sampleBuffer: CMSampleBuffer?) -> Swift.Void {

guard status == noErr else {

print("error: \(status)")

return

}

// infoFlags is an option set, so test for membership rather than equality

if infoFlags.contains(.frameDropped) {

print("frame dropped")

return

}

guard let sampleBuffer = sampleBuffer else {

print("sampleBuffer is nil")

return

}

if CMSampleBufferDataIsReady(sampleBuffer) != true {

print("sampleBuffer data is not ready")

return

}

// let desc = CMSampleBufferGetFormatDescription(sampleBuffer)

// let extensions = CMFormatDescriptionGetExtensions(desc!)

// print("extensions: \(extensions!)")

//

// let sampleCount = CMSampleBufferGetNumSamples(sampleBuffer)

// print("sample count: \(sampleCount)")

//

// let dataBuffer = CMSampleBufferGetDataBuffer(sampleBuffer)!

// var length: Int = 0

// var dataPointer: UnsafeMutablePointer<Int8>?

// CMBlockBufferGetDataPointer(dataBuffer, 0, nil, &length, &dataPointer)

// print("length: \(length), dataPointer: \(dataPointer!)")

let vc: ViewController = Unmanaged.fromOpaque(outputCallbackRefCon!).takeUnretainedValue()

if let attachments = CMSampleBufferGetSampleAttachmentsArray(sampleBuffer, true) {

print("attachments: \(attachments)")

let rawDic: UnsafeRawPointer = CFArrayGetValueAtIndex(attachments, 0)

let dic: CFDictionary = Unmanaged.fromOpaque(rawDic).takeUnretainedValue()

// if the NotSync key is absent, this sample is a sync (IDR) frame

let keyFrame = !CFDictionaryContainsKey(dic, Unmanaged.passUnretained(kCMSampleAttachmentKey_NotSync).toOpaque())

if keyFrame {

print("IDR frame")

// sps

let format = CMSampleBufferGetFormatDescription(sampleBuffer)

var spsSize: Int = 0

var spsCount: Int = 0

var nalHeaderLength: Int32 = 0

var sps: UnsafePointer<UInt8>?

if CMVideoFormatDescriptionGetH264ParameterSetAtIndex(format!,

0,

&sps,

&spsSize,

&spsCount,

&nalHeaderLength) == noErr {

print("sps: \(String(describing: sps)), spsSize: \(spsSize), spsCount: \(spsCount), NAL header length: \(nalHeaderLength)")

// pps

var ppsSize: Int = 0

var ppsCount: Int = 0

var pps: UnsafePointer<UInt8>?

if CMVideoFormatDescriptionGetH264ParameterSetAtIndex(format!,

1,

&pps,

&ppsSize,

&ppsCount,

&nalHeaderLength) == noErr {

print("pps: \(String(describing: pps)), ppsSize: \(ppsSize), ppsCount: \(ppsCount), NAL header length: \(nalHeaderLength)")

let spsData: NSData = NSData(bytes: sps, length: spsSize)

let ppsData: NSData = NSData(bytes: pps, length: ppsSize)

// save sps/pps to file

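// NOTE: in most cases the SPS/PPS do not change (or change very little), or the change has little effect on the video data,

// so you can usually write the SPS/PPS only once at the start of the file, or send them only once at the start of the stream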

vc.handle(sps: spsData, pps: ppsData)

}

}

} // end of handle sps/pps

// handle frame data

guard let dataBuffer = CMSampleBufferGetDataBuffer(sampleBuffer) else {

return

}

var lengthAtOffset: Int = 0

var totalLength: Int = 0

var dataPointer: UnsafeMutablePointer<Int8>?

if CMBlockBufferGetDataPointer(dataBuffer, 0, &lengthAtOffset, &totalLength, &dataPointer) == noErr {

var bufferOffset: Int = 0

let AVCCHeaderLength = 4

while bufferOffset < (totalLength - AVCCHeaderLength) {

var NALUnitLength: UInt32 = 0

// the first four bytes hold the NAL unit length (big-endian)

memcpy(&NALUnitLength, dataPointer?.advanced(by: bufferOffset), AVCCHeaderLength)

// big endian to host endian. in iOS it's little endian

NALUnitLength = CFSwapInt32BigToHost(NALUnitLength)

let data: NSData = NSData(bytes: dataPointer?.advanced(by: bufferOffset + AVCCHeaderLength), length: Int(NALUnitLength))

vc.encode(data: data, isKeyFrame: keyFrame)

// move forward to the next NAL Unit

bufferOffset += Int(AVCCHeaderLength)

bufferOffset += Int(NALUnitLength)

}

}

}

}

class ViewController: UIViewController {

let captureSession = AVCaptureSession()

let captureQueue = DispatchQueue(label: "videotoolbox.compression.capture")

let compressionQueue = DispatchQueue(label: "videotoolbox.compression.compression")

lazy var preview: AVCaptureVideoPreviewLayer = {

let preview = AVCaptureVideoPreviewLayer(session: self.captureSession)

preview.videoGravity = .resizeAspectFill

view.layer.addSublayer(preview)

return preview

}()

var compressionSession: VTCompressionSession?

var fileHandler: FileHandle?

var isCapturing: Bool = false

override func viewDidLoad() {

super.viewDidLoad()

let path = NSTemporaryDirectory() + "/temp.h264"

try? FileManager.default.removeItem(atPath: path)

if FileManager.default.createFile(atPath: path, contents: nil, attributes: nil) {

fileHandler = FileHandle(forWritingAtPath: path)

}

let device = AVCaptureDevice.default(for: .video)!

let input = try! AVCaptureDeviceInput(device: device)

if captureSession.canAddInput(input) {

captureSession.addInput(input)

}

captureSession.sessionPreset = .high

let output = AVCaptureVideoDataOutput()

// YUV 420v

output.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange]

output.setSampleBufferDelegate(self, queue: captureQueue)

if captureSession.canAddOutput(output) {

captureSession.addOutput(output)

}

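// not a robust approach: it relies on the two orientation enums sharing raw values, and the force unwrap crashes if the status bar orientation is .unknown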

if let connection = output.connection(with: .video) {

if connection.isVideoOrientationSupported {

connection.videoOrientation = AVCaptureVideoOrientation(rawValue: UIApplication.shared.statusBarOrientation.rawValue)!

}

}

captureSession.startRunning()

}

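override func viewDidLayoutSubviews() {

super.viewDidLayoutSubviews()

preview.frame = view.bounds

// viewDidLayoutSubviews can run many times, so add the button only once

let buttonTag = 1001

guard view.viewWithTag(buttonTag) == nil else {

return

}

let button = UIButton(type: .roundedRect)

button.tag = buttonTag

button.setTitle("Click Me", for: .normal)

button.backgroundColor = .red

button.addTarget(self, action: #selector(startOrNot), for: .touchUpInside)

button.frame = CGRect(x: 100, y: 200, width: 100, height: 40)

view.addSubview(button)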

}

}

extension ViewController {

@objc func startOrNot() {

if isCapturing {

stopCapture()

} else {

startCapture()

}

}

func startCapture() {

isCapturing = true

}

func stopCapture() {

isCapturing = false

guard let compressionSession = compressionSession else {

return

}

VTCompressionSessionCompleteFrames(compressionSession, kCMTimeInvalid)

VTCompressionSessionInvalidate(compressionSession)

self.compressionSession = nil

}

}

extension ViewController: AVCaptureVideoDataOutputSampleBufferDelegate {

func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {

guard let pixelbuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else {

return

}

// if CVPixelBufferIsPlanar(pixelbuffer) {

// print("planar: \(CVPixelBufferGetPixelFormatType(pixelbuffer))")

// }

//

// var desc: CMFormatDescription?

// CMVideoFormatDescriptionCreateForImageBuffer(kCFAllocatorDefault, pixelbuffer, &desc)

// let extensions = CMFormatDescriptionGetExtensions(desc!)

// print("extensions: \(extensions!)")

if compressionSession == nil {

let width = CVPixelBufferGetWidth(pixelbuffer)

let height = CVPixelBufferGetHeight(pixelbuffer)

print("width: \(width), height: \(height)")

VTCompressionSessionCreate(kCFAllocatorDefault,

Int32(width),

Int32(height),

kCMVideoCodecType_H264,

nil, nil, nil,

compressionOutputCallback,

UnsafeMutableRawPointer(Unmanaged.passUnretained(self).toOpaque()),

&compressionSession)

guard let c = compressionSession else {

return

}

// set profile to Main

VTSessionSetProperty(c, kVTCompressionPropertyKey_ProfileLevel, kVTProfileLevel_H264_Main_AutoLevel)

// capture from camera, so it's real time

VTSessionSetProperty(c, kVTCompressionPropertyKey_RealTime, true as CFTypeRef)

// key frame interval (maximum number of frames between key frames)

VTSessionSetProperty(c, kVTCompressionPropertyKey_MaxKeyFrameInterval, 10 as CFTypeRef)

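// bit rate and data rate limit: AverageBitRate is in bits per second; DataRateLimits is a [bytes, seconds] pair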

VTSessionSetProperty(c, kVTCompressionPropertyKey_AverageBitRate, width * height * 2 * 32 as CFTypeRef)

VTSessionSetProperty(c, kVTCompressionPropertyKey_DataRateLimits, [width * height * 2 * 4, 1] as CFArray)

VTCompressionSessionPrepareToEncodeFrames(c)

}

guard let c = compressionSession else {

return

}

guard isCapturing else {

return

}

compressionQueue.sync {

pixelbuffer.lock(.readwrite) {

let presentationTimestamp = CMSampleBufferGetOutputPresentationTimeStamp(sampleBuffer)

let duration = CMSampleBufferGetOutputDuration(sampleBuffer)

VTCompressionSessionEncodeFrame(c, pixelbuffer, presentationTimestamp, duration, nil, nil, nil)

}

}

}

func handle(sps: NSData, pps: NSData) {

guard let fh = fileHandler else {

return

}

let headerData: NSData = NSData(bytes: NALUHeader, length: NALUHeader.count)

fh.write(headerData as Data)

fh.write(sps as Data)

fh.write(headerData as Data)

fh.write(pps as Data)

}

func encode(data: NSData, isKeyFrame: Bool) {

guard let fh = fileHandler else {

return

}

let headerData: NSData = NSData(bytes: NALUHeader, length: NALUHeader.count)

fh.write(headerData as Data)

fh.write(data as Data)

}

}
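To get a feel for the two rate properties set in captureOutput above, the arithmetic can be worked through for a concrete frame size. This is only a sketch: the 1280x720 frame size is an assumed example (the real size depends on the capture preset), and `rateSettings(width:height:)` is a hypothetical helper that simply repeats the sample's formulas.

```swift
import Foundation

/// Repeats the arithmetic the sample uses for its two rate properties.
/// AverageBitRate is expressed in bits per second; DataRateLimits is a
/// (bytes, seconds) pair. Hypothetical helper for illustration only.
func rateSettings(width: Int, height: Int) -> (averageBitsPerSecond: Int, limitBytes: Int, limitSeconds: Int) {
    let averageBitsPerSecond = width * height * 2 * 32
    let limitBytes = width * height * 2 * 4
    return (averageBitsPerSecond, limitBytes, 1)
}

// Assumed example frame size.
let settings = rateSettings(width: 1280, height: 720)

// Convert both settings to megabits per second so they can be compared.
let averageMbps = Double(settings.averageBitsPerSecond) / 1_000_000
let limitMbps = Double(settings.limitBytes * 8) / Double(settings.limitSeconds) / 1_000_000
print("average: \(averageMbps) Mbit/s, limit: \(limitMbps) Mbit/s")
```

For 1280x720 both values come out at 58,982,400 bits per second, so with these formulas the hard limit equals the average target. That is probably far more than a network stream needs, so a real project would likely pick a much lower target for its use case.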

CVPixelBuffer+Extension.swift

//

// CVPixelBuffer+Extension.swift

// VideoToolboxCompression

//

// Created by tomisacat on 14/08/2017.

// Copyright © 2017 tomisacat. All rights reserved.

//

import Foundation

import VideoToolbox

import CoreVideo

extension CVPixelBuffer {

public enum LockFlag {

case readwrite

case readonly

func flag() -> CVPixelBufferLockFlags {

switch self {

case .readonly:

return .readOnly

default:

return CVPixelBufferLockFlags.init(rawValue: 0)

}

}

}

public func lock(_ flag: LockFlag, closure: (() -> Void)?) {

// unlock only if the lock actually succeeded

if CVPixelBufferLockBaseAddress(self, flag.flag()) == kCVReturnSuccess {

if let c = closure {

c()

}

CVPixelBufferUnlockBaseAddress(self, flag.flag())

}

}

}
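One way to sanity-check the temp.h264 file the sample writes is to list the NAL unit types it contains. The sketch below assumes the stream uses only 4-byte start codes, which is what handle(sps:pps:) and encode(data:isKeyFrame:) write. `nalUnitTypes(inAnnexB:)` and the sample bytes are hypothetical and only for illustration.

```swift
import Foundation

/// Scans an Annex B byte stream for 4-byte start codes (00 00 00 01) and
/// returns the nal_unit_type (low 5 bits of the byte after the start code)
/// of every NAL unit found. Hypothetical helper for illustration only.
func nalUnitTypes(inAnnexB bytes: [UInt8]) -> [UInt8] {
    var types: [UInt8] = []
    var i = 0
    // A start code plus at least one header byte must fit in the remainder.
    while i + 4 < bytes.count {
        if bytes[i] == 0, bytes[i + 1] == 0, bytes[i + 2] == 0, bytes[i + 3] == 1 {
            types.append(bytes[i + 4] & 0x1F)
            i += 5  // skip the start code and the header byte
        } else {
            i += 1
        }
    }
    return types
}

// Made-up stream: SPS (7), PPS (8), IDR slice (5), non-IDR slice (1).
let stream: [UInt8] = [0, 0, 0, 1, 0x67, 0x42,
                       0, 0, 0, 1, 0x68, 0xCE,
                       0, 0, 0, 1, 0x65, 0x88,
                       0, 0, 0, 1, 0x41, 0x9A]
let types = nalUnitTypes(inAnnexB: stream)
print(types)
```

In H.264, type 7 is an SPS, type 8 is a PPS, type 5 is an IDR slice, and type 1 is a non-IDR slice. If the sample ran correctly, each key frame in the file should show up as 7, 8 followed by one or more 5s. On a device, the file contents could be read into a `Data` value and passed in as `[UInt8](data)`.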