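When an iPhone records video through AVFoundation, the output file is encoded as H.265 (HEVC) by default. Of the major browsers, only Safari can decode that file directly; to play it in Chrome or Firefox as well, the video needs to be encoded as H.264. iOS provides AVAssetWriter, which can write an H.264 video file directly.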

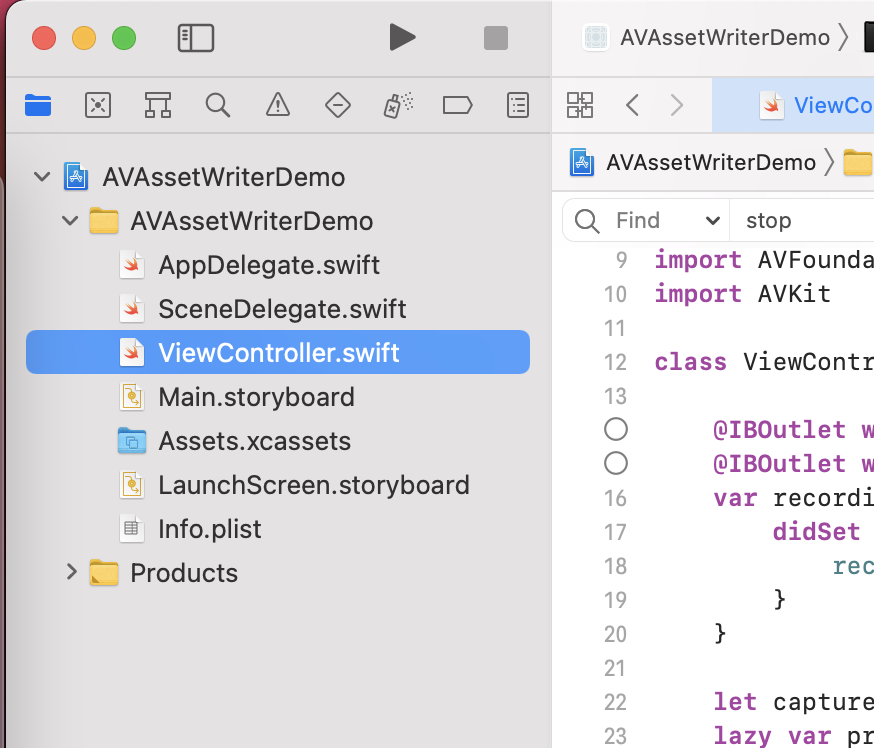
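To check which codec a recorded file actually uses, the video track's format description can be inspected. The sketch below is only an illustration: the helper names `fourCharString` and `videoCodecs(ofFileAt:)` are hypothetical and not part of either demo. An H.264 track reports `avc1`, while an iPhone HEVC recording typically reports `hvc1`.

```swift
import AVFoundation
import CoreMedia

/// Converts a four-character code such as a codec type into a readable string.
func fourCharString(_ code: FourCharCode) -> String {
    let bytes: [UInt8] = [
        UInt8((code >> 24) & 0xFF),
        UInt8((code >> 16) & 0xFF),
        UInt8((code >> 8) & 0xFF),
        UInt8(code & 0xFF),
    ]
    return String(bytes: bytes, encoding: .ascii) ?? "????"
}

/// Returns the codec codes of all video tracks in the file (hypothetical helper).
/// Note: tracks(withMediaType:) is synchronous and deprecated on recent SDKs,
/// but it keeps this sketch short.
func videoCodecs(ofFileAt url: URL) -> [String] {
    let asset = AVAsset(url: url)
    var codecs: [String] = []
    for track in asset.tracks(withMediaType: .video) {
        for description in track.formatDescriptions as! [CMFormatDescription] {
            codecs.append(fourCharString(CMFormatDescriptionGetMediaSubType(description)))
        }
    }
    return codecs
}
```

Calling `videoCodecs(ofFileAt:)` with the URL of a finished recording gives a quick way to confirm that the writer settings took effect before the file is uploaded or served to a browser.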

Demo 1: AVAssetWriterDemo, available at https://github.com/yinweisu/AVAssetWriterDemo

This demo does not capture audio, so the recording has no sound; see Demo 2 for a version with audio.

- ViewController.swift

//

// ViewController.swift

// AVAssetWriterDemo

//

// Created by Weisu Yin on 5/6/20.

// Copyright © 2020 UCDavis. All rights reserved.

import UIKit

import AVFoundation

import AVKit

class ViewController: UIViewController, AVCaptureVideoDataOutputSampleBufferDelegate {

@IBOutlet weak var previewView: UIView!

@IBOutlet weak var recordButton: UIButton!

var recording = false {

didSet {

recording ? self.start() : self.stop()

}

}

let captureSession = AVCaptureSession()

lazy var previewLayer = AVCaptureVideoPreviewLayer(session: self.captureSession)

var videoDataOutput: AVCaptureVideoDataOutput?

var assetWriter: AVAssetWriter?

var assetWriterInput: AVAssetWriterInput?

var filePath: URL?

var sessionAtSourceTime: CMTime?

override func viewDidLoad() {

super.viewDidLoad()

self.recording = false

self.requestCameraPermission()

}

@IBAction func recordButtonPressed(_ sender: Any) {

recording.toggle()

}

@IBAction func playRecordedVideo(_ sender: Any) {

guard let url = filePath else {

print("Can't get video url")

return

}

let player = AVPlayer(url: url)

let playerController = AVPlayerViewController()

playerController.player = player

present(playerController, animated: true) {

player.play()

}

}

// Check camera permission

func requestCameraPermission() {

switch AVCaptureDevice.authorizationStatus(for: .video) {

case .authorized:

self.setupCaptureSession()

case .notDetermined:

AVCaptureDevice.requestAccess(for: .video) { granted in

if granted {

DispatchQueue.main.async {

self.setupCaptureSession()

}

}

}

case .denied:

return

case .restricted:

return

@unknown default:

fatalError()

}

}

// Set up the capture session

func setupCaptureSession() {

self.captureSession.beginConfiguration()

guard let videoCaptureDevice = AVCaptureDevice.default(for: .video) else { return }

// Add the video input device

guard let videoDeviceInput = try? AVCaptureDeviceInput(device: videoCaptureDevice), self.captureSession.canAddInput(videoDeviceInput)

else { return }

self.captureSession.addInput(videoDeviceInput)

// Use AVCaptureVideoDataOutput as the output type

let tempVideoDataOutput = AVCaptureVideoDataOutput()

tempVideoDataOutput.alwaysDiscardsLateVideoFrames = true

tempVideoDataOutput.videoSettings = [kCVPixelBufferPixelFormatTypeKey as String: Int(kCVPixelFormatType_420YpCbCr8BiPlanarFullRange)]

tempVideoDataOutput.setSampleBufferDelegate(self, queue: DispatchQueue(label: "videoQueue"))

self.captureSession.addOutput(tempVideoDataOutput)

self.captureSession.commitConfiguration()

self.captureSession.startRunning()

self.videoDataOutput = tempVideoDataOutput

self.previewLayer.frame = self.previewView.frame

self.previewLayer.videoGravity = AVLayerVideoGravity.resizeAspectFill

self.view.layer.addSublayer(previewLayer)

self.setUpWriter()

}

// This method will overwrite previous video files

func videoFileLocation() -> URL {

let documentsPath = NSSearchPathForDirectoriesInDomains(.documentDirectory, .userDomainMask, true)[0] as NSString

let videoOutputUrl = URL(fileURLWithPath: documentsPath.appendingPathComponent("videoFile")).appendingPathExtension("mov")

do {

if FileManager.default.fileExists(atPath: videoOutputUrl.path) {

try FileManager.default.removeItem(at: videoOutputUrl)

print("file removed")

}

} catch {

print(error)

}

return videoOutputUrl

}

// Set up AVAssetWriter

func setUpWriter() {

do {

filePath = videoFileLocation()

assetWriter = try AVAssetWriter(outputURL: filePath!, fileType: AVFileType.mov)

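// add video input

// Note: these recommended settings are never applied; the explicit H.264 settings below are used instead

let settings = self.videoDataOutput?.recommendedVideoSettingsForAssetWriter(writingTo: .mp4)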

assetWriterInput = AVAssetWriterInput(mediaType: .video, outputSettings: [

AVVideoCodecKey : AVVideoCodecType.h264,

AVVideoWidthKey : 720,

AVVideoHeightKey : 1280,

AVVideoCompressionPropertiesKey : [

AVVideoAverageBitRateKey : 2300000,

],

])

guard let assetWriterInput = assetWriterInput, let assetWriter = assetWriter else { return }

assetWriterInput.expectsMediaDataInRealTime = true

// assetWriterInput.transform = CGAffineTransform(rotationAngle: .pi/2) // Adapt to portrait mode

if assetWriter.canAdd(assetWriterInput) {

assetWriter.add(assetWriterInput)

print("asset input added")

} else {

print("no input added")

}

assetWriter.startWriting()

self.assetWriter = assetWriter

self.assetWriterInput = assetWriterInput

} catch let error {

debugPrint(error.localizedDescription)

}

}

func canWrite() -> Bool {

return recording && assetWriter != nil && assetWriter?.status == .writing

}

func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {

connection.videoOrientation = .portrait

guard self.recording else { return }

let writable = self.canWrite()

if writable, self.sessionAtSourceTime == nil {

// start writing

sessionAtSourceTime = CMSampleBufferGetOutputPresentationTimeStamp(sampleBuffer)

self.assetWriter?.startSession(atSourceTime: sessionAtSourceTime!)

}

guard let assetWriterInput = self.assetWriterInput else { return }

if writable, assetWriterInput.isReadyForMoreMediaData {

// write video buffer

assetWriterInput.append(sampleBuffer)

}

}

func start() {

self.recordButton.setTitle("Stop", for: .normal)

self.sessionAtSourceTime = nil

self.setUpWriter()

switch self.assetWriter?.status {

case .writing:

print("status writing")

case .failed:

print("status failed")

case .cancelled:

print("status cancelled")

case .unknown:

print("status unknown")

default:

print("status completed")

}

}

func stop() {

self.recordButton.setTitle("Record", for: .normal)

self.assetWriterInput?.markAsFinished()

print("marked as finished")

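self.assetWriter?.finishWriting { [weak self] in

self?.sessionAtSourceTime = nil

// finishWriting is asynchronous, so report completion only from inside the handler

print("finished writing \(String(describing: self?.filePath))")

}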

}

}
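The writer configuration in `setUpWriter()` can also be factored into small helpers, which makes it easier to validate the settings before an input is created. This is a minimal sketch, assuming the same 720×1280 H.264 settings as Demo 1; the function names `h264Settings` and `makeVideoInput` are hypothetical and not part of the original demo.

```swift
import AVFoundation

/// Builds H.264 output settings equivalent to the dictionary used in Demo 1.
func h264Settings(width: Int, height: Int, bitRate: Int) -> [String: Any] {
    return [
        AVVideoCodecKey: AVVideoCodecType.h264,
        AVVideoWidthKey: width,
        AVVideoHeightKey: height,
        AVVideoCompressionPropertiesKey: [
            AVVideoAverageBitRateKey: bitRate,
        ],
    ]
}

/// Creates a real-time video input and attaches it to the writer.
/// Returns nil if the writer rejects the settings or the input.
func makeVideoInput(for writer: AVAssetWriter, settings: [String: Any]) -> AVAssetWriterInput? {
    // AVAssetWriterInput can raise an exception on unsupported settings,
    // so ask the writer whether it accepts them first.
    guard writer.canApply(outputSettings: settings, forMediaType: .video) else { return nil }
    let input = AVAssetWriterInput(mediaType: .video, outputSettings: settings)
    input.expectsMediaDataInRealTime = true
    guard writer.canAdd(input) else { return nil }
    writer.add(input)
    return input
}
```

With these helpers, `setUpWriter()` would call `makeVideoInput(for:settings:)` with `h264Settings(width: 720, height: 1280, bitRate: 2_300_000)` and skip `startWriting()` when the result is nil.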

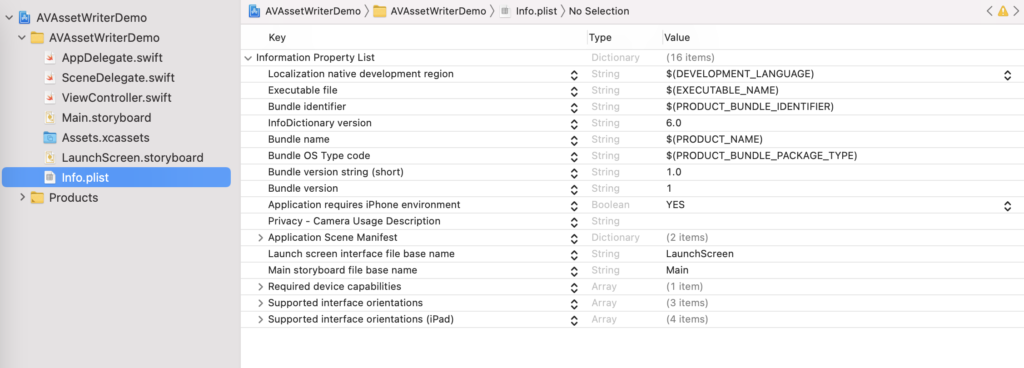

- info.plist
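Capture requires usage-description entries in Info.plist: `NSCameraUsageDescription` for the camera and, once audio is recorded, `NSMicrophoneUsageDescription` for the microphone. As a small sketch (the helper `missingUsageDescriptions` is hypothetical, not part of either demo), the entries can be checked when the app starts:

```swift
import Foundation

/// Returns the usage-description keys that are absent or empty in an Info.plist dictionary.
func missingUsageDescriptions(in info: [String: Any], recordsAudio: Bool) -> [String] {
    var required = ["NSCameraUsageDescription"]
    if recordsAudio {
        required.append("NSMicrophoneUsageDescription")
    }
    return required.filter { key in
        guard let text = info[key] as? String else { return true }
        return text.trimmingCharacters(in: .whitespaces).isEmpty
    }
}

// Usage: inspect the running app's own Info.plist.
let missing = missingUsageDescriptions(in: Bundle.main.infoDictionary ?? [:], recordsAudio: true)
if !missing.isEmpty {
    print("Info.plist is missing: \(missing)")
}
```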

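Demo 2: this demo records a video file with sound. Note that it captures the screen through AVCaptureScreenInput, which is a macOS API; on an iPhone the video input would instead be an AVCaptureDeviceInput for the camera, as in Demo 1.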

//

// ViewController.swift

// CustomCamera

//

// Created by Taras Chernyshenko on 6/27/17.

// Copyright © 2017 Taras Chernyshenko. All rights reserved.

//

import AVFoundation

import Photos

class NewRecorder: NSObject,

AVCaptureAudioDataOutputSampleBufferDelegate,

AVCaptureVideoDataOutputSampleBufferDelegate {

private var session: AVCaptureSession = AVCaptureSession()

private var deviceInput: AVCaptureScreenInput?

private var previewLayer: AVCaptureVideoPreviewLayer?

private var videoOutput: AVCaptureVideoDataOutput = AVCaptureVideoDataOutput()

private var audioOutput: AVCaptureAudioDataOutput = AVCaptureAudioDataOutput()

//private var videoDevice: AVCaptureDevice = AVCaptureScreenInput(displayID: 69731840) //AVCaptureDevice.default(for: AVMediaType.video)!

private var audioConnection: AVCaptureConnection?

private var videoConnection: AVCaptureConnection?

private var assetWriter: AVAssetWriter?

private var audioInput: AVAssetWriterInput?

private var videoInput: AVAssetWriterInput?

private var fileManager: FileManager = FileManager()

private var recordingURL: URL?

private var isCameraRecording: Bool = false

private var isRecordingSessionStarted: Bool = false

private var recordingQueue = DispatchQueue(label: "recording.queue")

func setup() {

self.session.sessionPreset = AVCaptureSession.Preset.high

self.recordingURL = URL(fileURLWithPath: "\(NSTemporaryDirectory() as String)/file.mp4")

if self.fileManager.isDeletableFile(atPath: self.recordingURL!.path) {

_ = try? self.fileManager.removeItem(atPath: self.recordingURL!.path)

}

self.assetWriter = try? AVAssetWriter(outputURL: self.recordingURL!,

fileType: AVFileType.mp4)

self.assetWriter!.movieFragmentInterval = CMTime.invalid

self.assetWriter!.shouldOptimizeForNetworkUse = true

let audioSettings = [

AVFormatIDKey : kAudioFormatMPEG4AAC,

AVNumberOfChannelsKey : 2,

AVSampleRateKey : 44100.0,

AVEncoderBitRateKey: 192000

] as [String : Any]

let videoSettings = [

AVVideoCodecKey : AVVideoCodecType.h264,

AVVideoWidthKey : 1920,

AVVideoHeightKey : 1080

/*AVVideoCompressionPropertiesKey: [

AVVideoAverageBitRateKey: NSNumber(value: 5000000)

]*/

] as [String : Any]

self.videoInput = AVAssetWriterInput(mediaType: AVMediaType.video,

outputSettings: videoSettings)

self.audioInput = AVAssetWriterInput(mediaType: AVMediaType.audio,

outputSettings: audioSettings)

self.videoInput?.expectsMediaDataInRealTime = true

self.audioInput?.expectsMediaDataInRealTime = true

if self.assetWriter!.canAdd(self.videoInput!) {

self.assetWriter?.add(self.videoInput!)

}

if self.assetWriter!.canAdd(self.audioInput!) {

self.assetWriter?.add(self.audioInput!)

}

//self.deviceInput = try? AVCaptureDeviceInput(device: self.videoDevice)

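// The display ID is machine-specific; CGMainDisplayID() returns the ID of the main display

self.deviceInput = AVCaptureScreenInput(displayID: 724042646)

self.deviceInput!.minFrameDuration = CMTimeMake(value: 1, timescale: 30)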

self.deviceInput!.capturesCursor = true

self.deviceInput!.capturesMouseClicks = true

if self.session.canAddInput(self.deviceInput!) {

self.session.addInput(self.deviceInput!)

}

self.previewLayer = AVCaptureVideoPreviewLayer(session: self.session)

// important line of code that does the trick

//self.previewLayer?.videoGravity = AVLayerVideoGravity.resizeAspectFill

//let rootLayer = self.view.layer

//rootLayer.masksToBounds = true

//self.previewLayer?.frame = CGRect(x: 0, y: 0, width: 1920, height: 1080)

//rootLayer.insertSublayer(self.previewLayer!, at: 0)

self.session.startRunning()

DispatchQueue.main.async {

self.session.beginConfiguration()

if self.session.canAddOutput(self.videoOutput) {

self.session.addOutput(self.videoOutput)

}

self.videoConnection = self.videoOutput.connection(with: AVMediaType.video)

/*if self.videoConnection?.isVideoStabilizationSupported == true {

self.videoConnection?.preferredVideoStabilizationMode = .auto

}*/

self.session.commitConfiguration()

let audioDevice = AVCaptureDevice.default(for: AVMediaType.audio)

let audioIn = try? AVCaptureDeviceInput(device: audioDevice!)

if self.session.canAddInput(audioIn!) {

self.session.addInput(audioIn!)

}

if self.session.canAddOutput(self.audioOutput) {

self.session.addOutput(self.audioOutput)

}

self.audioConnection = self.audioOutput.connection(with: AVMediaType.audio)

}

}

func startRecording() {

if self.assetWriter?.startWriting() != true {

print("error: \(self.assetWriter?.error.debugDescription ?? "")")

}

self.videoOutput.setSampleBufferDelegate(self, queue: self.recordingQueue)

self.audioOutput.setSampleBufferDelegate(self, queue: self.recordingQueue)

}

func stopRecording() {

self.videoOutput.setSampleBufferDelegate(nil, queue: nil)

self.audioOutput.setSampleBufferDelegate(nil, queue: nil)

self.assetWriter?.finishWriting {

print("Saved in folder \(self.recordingURL!)")

exit(0)

}

}

func captureOutput(_ captureOutput: AVCaptureOutput, didOutput

sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {

if !self.isRecordingSessionStarted {

let presentationTime = CMSampleBufferGetPresentationTimeStamp(sampleBuffer)

self.assetWriter?.startSession(atSourceTime: presentationTime)

self.isRecordingSessionStarted = true

}

let description = CMSampleBufferGetFormatDescription(sampleBuffer)!

if CMFormatDescriptionGetMediaType(description) == kCMMediaType_Audio {

if self.audioInput!.isReadyForMoreMediaData {

//print("appendSampleBuffer audio");

self.audioInput?.append(sampleBuffer)

}

} else {

if self.videoInput!.isReadyForMoreMediaData {

//print("appendSampleBuffer video");

if !self.videoInput!.append(sampleBuffer) {

print("Error writing video buffer");

}

}

}

}

}
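Demo 2 takes its video from the screen, so it does not run on an iPhone as written. As a rough sketch of how Demo 1 could gain sound instead, the microphone can be added to the existing capture session and its buffers routed to a second writer input. The function names below are hypothetical, and the mono 64 kbit/s AAC settings are an assumption rather than values taken from either demo.

```swift
import AVFoundation

/// Adds the default microphone and an audio data output to an existing session.
/// Returns the output so the caller can set its sample buffer delegate, or nil on failure.
func addMicrophone(to session: AVCaptureSession) -> AVCaptureAudioDataOutput? {
    guard let microphone = AVCaptureDevice.default(for: .audio),
          let input = try? AVCaptureDeviceInput(device: microphone) else { return nil }
    let output = AVCaptureAudioDataOutput()
    session.beginConfiguration()
    defer { session.commitConfiguration() }
    guard session.canAddInput(input) else { return nil }
    session.addInput(input)
    guard session.canAddOutput(output) else { return nil }
    session.addOutput(output)
    return output
}

/// Builds a real-time AAC writer input (assumed settings: mono, 44.1 kHz, 64 kbit/s).
func makeAACWriterInput() -> AVAssetWriterInput {
    let settings: [String: Any] = [
        AVFormatIDKey: kAudioFormatMPEG4AAC,
        AVNumberOfChannelsKey: 1,
        AVSampleRateKey: 44_100.0,
        AVEncoderBitRateKey: 64_000,
    ]
    let input = AVAssetWriterInput(mediaType: .audio, outputSettings: settings)
    input.expectsMediaDataInRealTime = true
    return input
}

/// Appends a captured buffer to the matching writer input.
/// Returns false only if the append itself failed.
@discardableResult
func route(_ sampleBuffer: CMSampleBuffer, from output: AVCaptureOutput,
           video: AVAssetWriterInput, audio: AVAssetWriterInput) -> Bool {
    let target = output is AVCaptureAudioDataOutput ? audio : video
    // Dropping a buffer while the input is busy is acceptable for real-time capture.
    guard target.isReadyForMoreMediaData else { return true }
    return target.append(sampleBuffer)
}
```

Both data outputs can share one delegate queue, as Demo 2 does. The audio writer input has to be added to the AVAssetWriter before `startWriting()` is called, and the view controller would also need to conform to `AVCaptureAudioDataOutputSampleBufferDelegate`.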