FaceFusion has been updated to version 2.6.0. Here are some tips for installing it.

Install Conda

FaceFusion has dropped Python virtual environments in favor of Conda.

    curl -LO https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh
    bash Miniconda3-latest-Linux-x86_64.sh

Initialize the Conda environment

    conda init --all
    conda create --name facefusion python=3.10
    conda activate facefusion
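
The installer shown further down exits early when `CONDA_PREFIX` is missing from the environment, so it can be worth confirming that the environment is really active before going on. A minimal sketch, assuming the `CONDA_PREFIX` and `CONDA_DEFAULT_ENV` variables that `conda activate` normally exports; the helper name `active_conda_env` is made up for illustration:

```python
import os
from typing import Mapping, Optional


def active_conda_env(environ: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the name of the active Conda environment, or None if there is none."""
    # conda activate exports CONDA_PREFIX, the path of the active environment.
    prefix = environ.get('CONDA_PREFIX')
    if not prefix:
        return None
    # CONDA_DEFAULT_ENV usually holds the name; otherwise use the last path component.
    return environ.get('CONDA_DEFAULT_ENV') or os.path.basename(prefix.rstrip('/\\'))


if __name__ == '__main__':
    print(active_conda_env() or 'no Conda environment is active')
```

If this prints something other than `facefusion`, run `conda activate facefusion` again in the current shell.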

Download the code

    git clone https://github.com/facefusion/facefusion
    cd facefusion

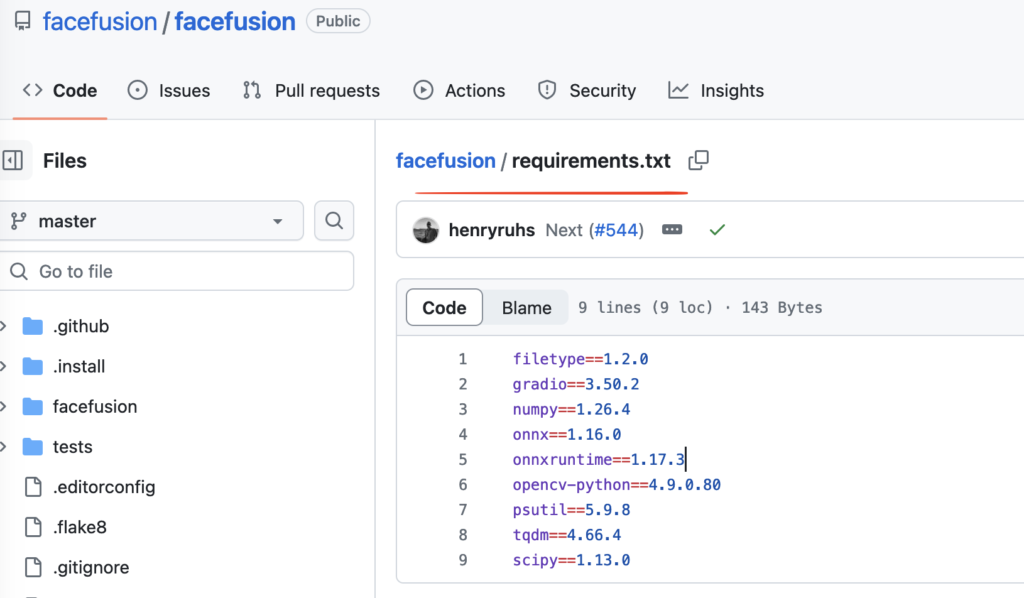

Edit requirements.txt. On my CentOS machine, onnxruntime can only be installed up to version 1.16.3, so that is the version pinned here:

    filetype==1.2.0
    gradio==3.50.2
    numpy==1.26.2
    onnx==1.15.0
    onnxruntime==1.16.3
    opencv-python==4.9.0.80
    psutil==5.9.6
    tqdm==4.66.1
    scipy==1.13.0
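
Once the dependencies are installed, one way to confirm that the pins took effect is to compare them with what is actually in the environment. This is only a sketch: it handles plain `name==version` lines like the ones above and nothing more, and the helper names `parse_pins` and `find_mismatches` are made up for illustration.

```python
from importlib import metadata
from typing import Dict, List, Tuple


def parse_pins(text: str) -> Dict[str, str]:
    """Parse 'name==version' lines, ignoring blank lines and '#' comments."""
    pins = {}
    for line in text.splitlines():
        line = line.split('#', 1)[0].strip()
        if '==' in line:
            name, version = line.split('==', 1)
            pins[name.strip()] = version.strip()
    return pins


def find_mismatches(pins: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Return (name, pinned, installed) for every package that differs from its pin."""
    mismatches = []
    for name, pinned in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = 'not installed'
        if installed != pinned:
            mismatches.append((name, pinned, installed))
    return mismatches
```

From the facefusion directory, `find_mismatches(parse_pins(open('requirements.txt').read()))` returns an empty list when every pinned package is installed at the pinned version.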

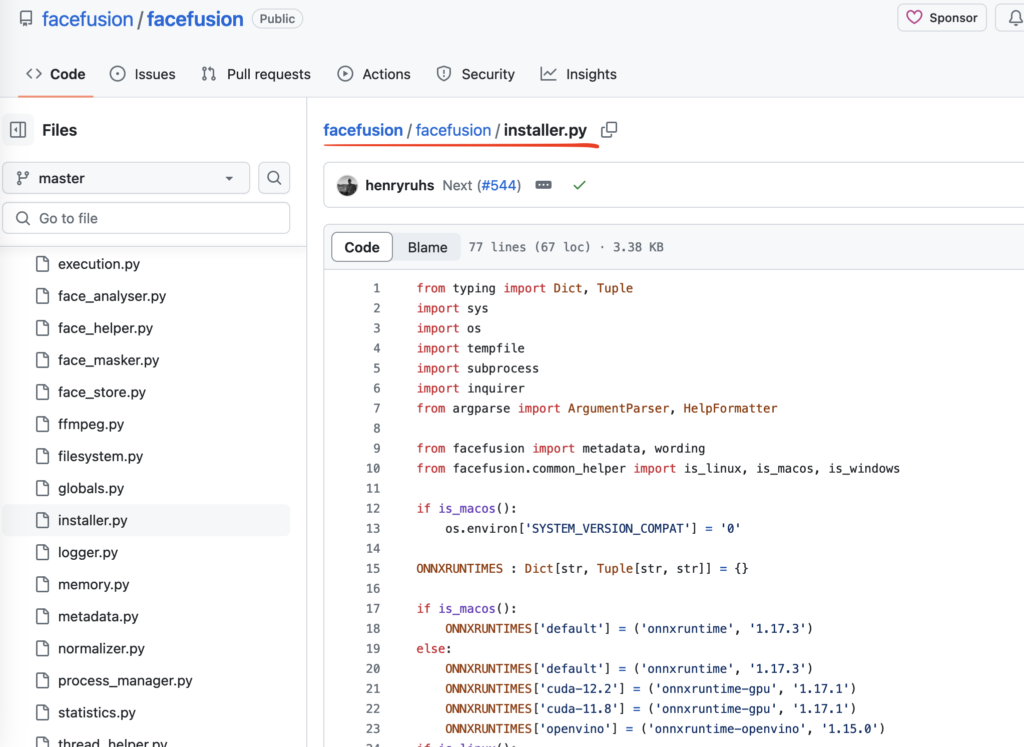

Edit facefusion/installer.py

    from typing import Dict, Tuple
    import sys
    import os
    import tempfile
    import subprocess
    import inquirer
    from argparse import ArgumentParser, HelpFormatter

    from facefusion import metadata, wording
    from facefusion.common_helper import is_linux, is_macos, is_windows

    if is_macos():
        os.environ['SYSTEM_VERSION_COMPAT'] = '0'

    ONNXRUNTIMES : Dict[str, Tuple[str, str]] = {}

    if is_macos():
        ONNXRUNTIMES['default'] = ('onnxruntime', '1.16.3')
    else:
        ONNXRUNTIMES['default'] = ('onnxruntime', '1.16.3')
        ONNXRUNTIMES['cuda-12.2'] = ('onnxruntime-gpu', '1.17.1')
        ONNXRUNTIMES['cuda-11.8'] = ('onnxruntime-gpu', '1.17.1')
        ONNXRUNTIMES['openvino'] = ('onnxruntime-openvino', '1.15.0')
    if is_linux():
        ONNXRUNTIMES['rocm-5.4.2'] = ('onnxruntime-rocm', '1.16.3')
        ONNXRUNTIMES['rocm-5.6'] = ('onnxruntime-rocm', '1.16.3')
    if is_windows():
        ONNXRUNTIMES['directml'] = ('onnxruntime-directml', '1.16.3')


    def cli() -> None:
        program = ArgumentParser(formatter_class = lambda prog: HelpFormatter(prog, max_help_position = 200))
        program.add_argument('--onnxruntime', help = wording.get('help.install_dependency').format(dependency = 'onnxruntime'), choices = ONNXRUNTIMES.keys())
        program.add_argument('--skip-conda', help = wording.get('help.skip_conda'), action = 'store_true')
        program.add_argument('-v', '--version', version = metadata.get('name') + ' ' + metadata.get('version'), action = 'version')
        run(program)


    def run(program : ArgumentParser) -> None:
        args = program.parse_args()
        python_id = 'cp' + str(sys.version_info.major) + str(sys.version_info.minor)

        if not args.skip_conda and 'CONDA_PREFIX' not in os.environ:
            sys.stdout.write(wording.get('conda_not_activated') + os.linesep)
            sys.exit(1)
        if args.onnxruntime:
            answers =\
            {
                'onnxruntime': args.onnxruntime
            }
        else:
            answers = inquirer.prompt(
            [
                inquirer.List('onnxruntime', message = wording.get('help.install_dependency').format(dependency = 'onnxruntime'), choices = list(ONNXRUNTIMES.keys()))
            ])
        if answers:
            onnxruntime = answers['onnxruntime']
            onnxruntime_name, onnxruntime_version = ONNXRUNTIMES[onnxruntime]

            subprocess.call([ 'pip', 'install', '-r', 'requirements.txt', '--force-reinstall' ])
            if onnxruntime == 'rocm-5.4.2' or onnxruntime == 'rocm-5.6':
                if python_id in [ 'cp39', 'cp310', 'cp311' ]:
                    rocm_version = onnxruntime.replace('-', '')
                    rocm_version = rocm_version.replace('.', '')
                    wheel_name = 'onnxruntime_training-' + onnxruntime_version + '+' + rocm_version + '-' + python_id + '-' + python_id + '-manylinux_2_17_x86_64.manylinux2014_x86_64.whl'
                    wheel_path = os.path.join(tempfile.gettempdir(), wheel_name)
                    wheel_url = 'https://download.onnxruntime.ai/' + wheel_name
                    subprocess.call([ 'curl', '--silent', '--location', '--continue-at', '-', '--output', wheel_path, wheel_url ])
                    subprocess.call([ 'pip', 'uninstall', wheel_path, '-y', '-q' ])
                    subprocess.call([ 'pip', 'install', wheel_path, '--force-reinstall' ])
                    os.remove(wheel_path)
            else:
                subprocess.call([ 'pip', 'uninstall', 'onnxruntime', onnxruntime_name, '-y', '-q' ])
                if onnxruntime == 'cuda-12.2':
                    subprocess.call([ 'pip', 'install', onnxruntime_name + '==' + onnxruntime_version, '--extra-index-url', 'https://aiinfra.pkgs.visualstudio.com/PublicPackages/_packaging/onnxruntime-cuda-12/pypi/simple', '--force-reinstall' ])
                else:
                    subprocess.call([ 'pip', 'install', onnxruntime_name + '==' + onnxruntime_version, '--force-reinstall' ])
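
The ROCm branch of `run()` builds a wheel file name out of the selected runtime key, the pinned version, and the Python tag. The sketch below isolates that string handling so the result is easy to inspect. The function name `rocm_wheel_name` is hypothetical and not part of FaceFusion, and nothing here checks that such a wheel actually exists at the download URL.

```python
def rocm_wheel_name(onnxruntime: str, onnxruntime_version: str, python_id: str) -> str:
    """Rebuild the wheel file name the same way installer.py does."""
    # 'rocm-5.6' becomes 'rocm56': the dash and the dots are stripped.
    rocm_version = onnxruntime.replace('-', '').replace('.', '')
    return ('onnxruntime_training-' + onnxruntime_version + '+' + rocm_version
            + '-' + python_id + '-' + python_id
            + '-manylinux_2_17_x86_64.manylinux2014_x86_64.whl')


print(rocm_wheel_name('rocm-5.6', '1.16.3', 'cp310'))
# onnxruntime_training-1.16.3+rocm56-cp310-cp310-manylinux_2_17_x86_64.manylinux2014_x86_64.whl
```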

Install the dependencies

    python install.py
    python run.py
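
After `install.py` finishes, a quick check like the following shows which onnxruntime build ended up in the environment and which execution providers it offers. It is a sketch rather than part of FaceFusion, and the helper name `describe_onnxruntime` is made up for illustration.

```python
import importlib.util
from typing import Dict, List, Optional, Union


def describe_onnxruntime() -> Optional[Dict[str, Union[str, List[str]]]]:
    """Return the installed onnxruntime version and providers, or None if it is missing."""
    if importlib.util.find_spec('onnxruntime') is None:
        return None
    import onnxruntime
    return {
        'version': onnxruntime.__version__,
        'providers': onnxruntime.get_available_providers()
    }


print(describe_onnxruntime())
```

With the `default` option selected in the installer, the reported version should match the 1.16.3 pin from requirements.txt.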

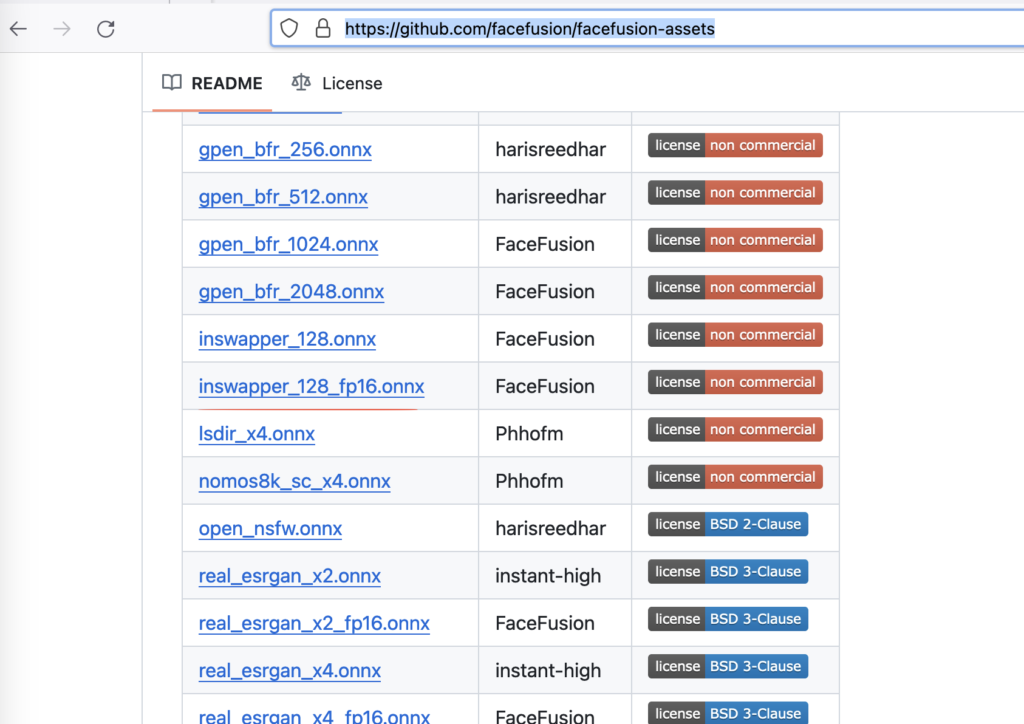

Update the models (important)

Along with the switch to Conda, the models have been updated as well.

https://github.com/facefusion/facefusion-assets

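Take the face-swap model inswapper_128_fp16.onnx as an example: the file name is unchanged, but the contents are different. You need to download it again from the site above and replace the copy under /mnt/facefusion/.assets.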
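
Because the file name stays the same, the only way to tell an old model from a new one is to compare the contents. A minimal sketch using SHA-256 digests; the helper names are made up for illustration, and both paths are whatever applies on your machine (for instance the existing copy under /mnt/facefusion/.assets and a fresh download).

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large model files do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open('rb') as file:
        for chunk in iter(lambda: file.read(chunk_size), b''):
            digest.update(chunk)
    return digest.hexdigest()


def is_same_model(old_path: Path, new_path: Path) -> bool:
    """True when both files have identical contents, whatever they are called."""
    return sha256_of(old_path) == sha256_of(new_path)
```

If `is_same_model` returns False for the local copy and the freshly downloaded file, the local model is stale and should be replaced.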

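Start a Gradio tunnel to expose the local UI

Gradio is a Python library for building interactive interfaces for machine learning models. With it you can quickly put a visual, easy-to-use web interface in front of a model without writing any front-end code. Here we use Gradio to map the local port 7860 to a public address.

Create a new file named share.py in the facefusion/uis/layouts/ directory: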

    import gradio

    from facefusion.uis.layouts import default


    def pre_check() -> bool:
        return default.pre_check()


    def pre_render() -> bool:
        return default.pre_render()


    def render() -> gradio.Blocks:
        return default.render()


    def listen() -> None:
        default.listen()


    def run(ui : gradio.Blocks) -> None:
        ui.launch(show_api = False, share = True)

Go back to the facefusion directory and start run.py again:

    python run.py --ui-layouts share

Once it has started, you will see output like this:

    Running on local URL: http://127.0.0.1:7860
    Running on public URL: https://e41b4898c4fad7cc83.gradio.live

    This share link expires in 72 hours. For free permanent hosting and GPU upgrades, run `gradio deploy` from Terminal to deploy to Spaces (https://huggingface.co/spaces)

You can now open the FaceFusion interface through your own public URL.